CHAPTER 2: Shared Memory Patterns using OpenMP

When writing programs for shared-memory hardware with multiple cores, a programmer could use a low-level thread package such as pthreads. An alternative is to use a compiler that processes OpenMP pragmas, which are compiler directives that tell the compiler to generate threaded code. Whereas pthreads uses an explicit multithreading model in which the programmer must create and manage threads, OpenMP uses an implicit multithreading model in which the compiler and its runtime library handle thread creation and management, making the programmer’s task much simpler and less error-prone. OpenMP is a standard that conforming compilers must adhere to.

The following sections contain examples of C code with OpenMP pragmas. There is one C++ example that is used to illustrate a point about that language.

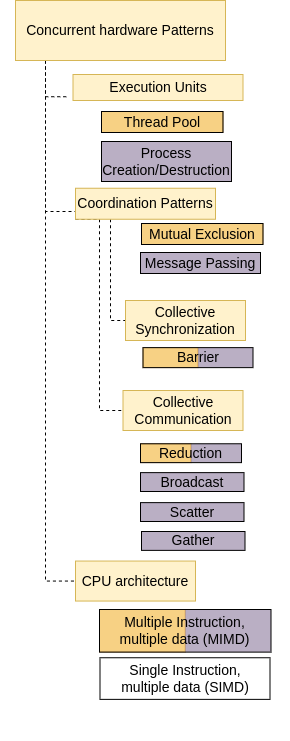

In the previous chapter we introduced these most common Strategy Patterns:

The first four examples in the next section are basic illustrations that let you get used to the OpenMP pragmas and conceptualize the two primary patterns that almost all shared-memory parallel programs use as program structure implementation strategies:

fork/join: forking threads and joining them back, and

single program, multiple data: writing one program in which separate threads may be performing different computations simultaneously on different data, some of which might be shared in memory.
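As a minimal sketch of these two patterns (not one of the chapter's own examples), the function below forks a team of threads, has every thread run the same block while reporting its own id, and then joins back to a single thread. The names `fork_join_demo`, `thread_id`, and `team_size` are hypothetical helpers introduced for illustration. The `#ifdef _OPENMP` guards are an assumption made here so that the file still compiles, and runs with one thread, when OpenMP is not enabled.

```c
#include <stdio.h>
#ifdef _OPENMP
#include <omp.h>
#endif

/* Hypothetical helper: id of the calling thread (0 if OpenMP is disabled). */
static int thread_id(void) {
#ifdef _OPENMP
    return omp_get_thread_num();
#else
    return 0;
#endif
}

/* Hypothetical helper: size of the current team (1 if OpenMP is disabled). */
static int team_size(void) {
#ifdef _OPENMP
    return omp_get_num_threads();
#else
    return 1;
#endif
}

/* Forks a team, lets each thread run the same code on its own data
 * (its id), joins, and returns the team size that was observed. */
int fork_join_demo(void) {
    int observed = 1;                 /* shared: written by one thread only */

    printf("Before the fork: one thread\n");

    #pragma omp parallel              /* fork: a team of threads starts here */
    {
        int id = thread_id();         /* declared inside, so private per thread */
        int n = team_size();
        printf("Hello from thread %d of %d\n", id, n);

        #pragma omp single            /* exactly one thread records the size */
        observed = n;
    }                                 /* join: implicit barrier, team ends */

    printf("After the join: one thread again\n");
    return observed;
}
```

Calling `fork_join_demo()` from `main` and compiling with, for example, `gcc -fopenmp` should print one greeting per thread, in an order that can change from run to run.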

The rest of the examples illustrate how to implement other patterns along with the above two, and what can go wrong when mutual exclusion is not properly ensured. The data structure pattern that is relevant to shared-memory machines and OpenMP is the Shared Data pattern, shown in the orange box. You will see examples of how to share variables among all threads and how to indicate when you do not want a variable shared among all threads, but instead want each thread to keep a private copy.
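The difference between shared and private variables can be sketched as follows. This is an illustrative assumption rather than one of the chapter's examples: the function name `fill_squares` is hypothetical, and `default(none)` is used here so that the sharing of every variable has to be stated explicitly.

```c
/* Fills out[0..n-1] with the squares of the indices.
 * out and n are shared by all threads; square is private, so each
 * thread works on its own copy and no thread overwrites another's. */
void fill_squares(int out[], int n) {
    int square;   /* declared outside the loop, so it must be made private */

    #pragma omp parallel for default(none) shared(out, n) private(square)
    for (int i = 0; i < n; i++) {     /* the loop index is private automatically */
        square = i * i;
        out[i] = square;              /* each iteration writes a distinct element */
    }
}
```

If `square` were left shared instead, two threads could overwrite each other's value between the assignment and the store, which is the kind of error the later sections on private variables examine.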

Recall these concurrent hardware strategies mentioned in the previous chapter:

Note that by default OpenMP uses the Thread Pool pattern of concurrent execution units. OpenMP programs initialize a group of threads to be used by a given program (often called a pool of threads). These threads will execute concurrently during portions of the code specified by the programmer. In addition, the multiple instruction, multiple data pattern is used in OpenMP programs because multiple threads can be executing different instructions on different data in memory at the same point in time. The other concurrent hardware patterns that are relevant for shared memory programming with OpenMP are illustrated in sections of this chapter: Barrier, Reduction, and Mutual Exclusion.
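To make the Reduction, Mutual Exclusion, and Barrier patterns concrete before the detailed sections, here is a minimal sketch that sums the integers 1 through n in two ways. The function names `sum_with_reduction` and `sum_with_critical` are hypothetical and are not taken from the chapter's examples.

```c
/* Reduction: each thread accumulates into its own private copy of sum,
 * and OpenMP combines the copies when the loop ends. */
long sum_with_reduction(int n) {
    long sum = 0;
    #pragma omp parallel for reduction(+:sum)
    for (int i = 1; i <= n; i++) {
        sum += i;
    }
    return sum;
}

/* Mutual exclusion: each thread builds a local partial sum, then adds it
 * to the shared total inside a critical section, one thread at a time. */
long sum_with_critical(int n) {
    long sum = 0;
    #pragma omp parallel
    {
        long local = 0;               /* private: declared inside the region */

        #pragma omp for               /* implicit barrier at the end of the loop */
        for (int i = 1; i <= n; i++) {
            local += i;
        }

        #pragma omp critical          /* only one thread updates sum at a time */
        sum += local;
    }
    return sum;
}
```

Both functions should return n(n+1)/2. Removing the `critical` pragma from the second version would let threads update `sum` at the same time, which is the race condition discussed later in the chapter.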

All of the code examples in this chapter were written by Joel Adams of Calvin University.

- Shared Memory Program Structure and Coordination Patterns

- 0. Program Structure Implementation Strategy: The basic fork-join pattern

- 1. Program Structure Implementation Strategy: Fork-join with setting the number of threads

- 2. Program Structure Implementation Strategy: Single Program, multiple data

- 3. Program Structure Implementation Strategy: Single Program, multiple data with user-defined number of threads

- 4. Coordination: Synchronization with a Barrier

- 5. Program Structure: The Conductor-Worker Implementation Strategy

- Data Decomposition Algorithm Strategies and Related Coordination Strategies

- Patterns used when threads share data values

- 9. Shared Data Algorithm Strategy: Parallel-for-loop pattern needs non-shared, private variables

- 10. Helpful OpenMP programming practice: Parallel for loop without default sharing

- 11. Reduction without default sharing

- 12. Race Condition: missing the mutual exclusion coordination pattern

- 13. The Mutual Exclusion Coordination Pattern: two ways to ensure

- 14. Mutual Exclusion Coordination Pattern: compare performance

- 15. Mutual Exclusion Coordination Pattern: language difference

- Task Decomposition Algorithm Strategies