Task Decomposition Algorithm Strategies

Some threaded programs use a form of task decomposition: specifying which threads will perform which tasks in parallel at certain points in the program. We have seen one way of dictating this with the conductor-worker implementation strategy, where one thread does one task and all the others do another. Here we introduce a more general approach.

16. Task Decomposition Algorithm Strategy using OpenMP section directive

This example shows how to create a program with arbitrary separate tasks that run concurrently. This is useful when the tasks do not depend on one another. More sophisticated programs can have some dependence between tasks, as in producer-consumer problems.

In this example we know there are 4 separate tasks, so we added the clause num_threads(4) to the pragma.

Summary Overview

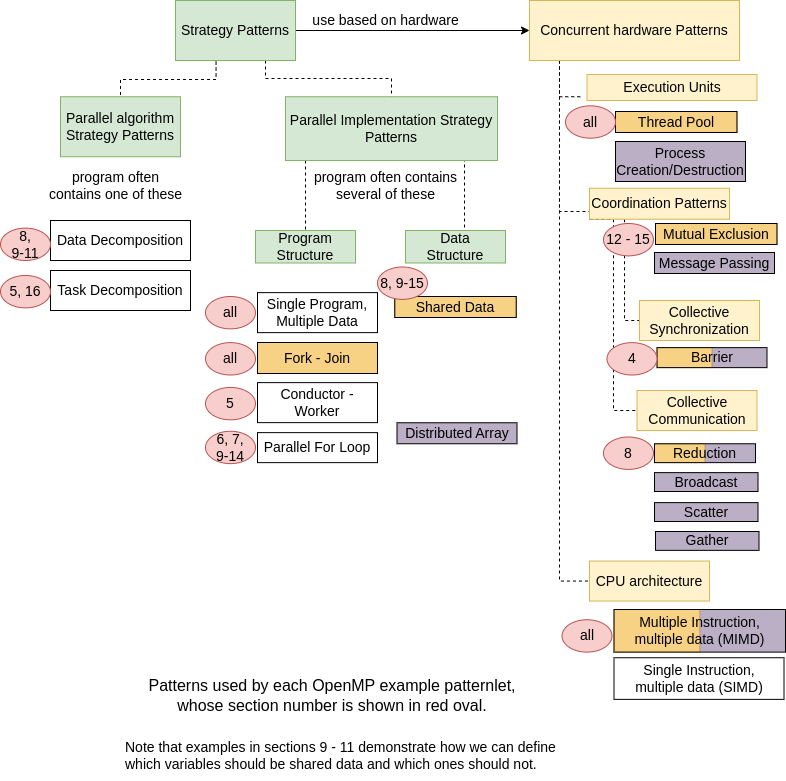

This final patternlet example is now added to the diagram under Program Structure Implementation Strategy Patterns as Task Decomposition. We also added example 5, which used the Conductor-Worker pattern, as another type of task decomposition.

Note

You might be wondering where the box on the lower right, SIMD architecture, applies. GPU cards, another kind of parallel computing device, have this type of architecture. We provided a few code examples in the PDC for Beginners book to introduce these and the CUDA programming language that goes with them. In future chapters and books we will return to examples for programming on GPUs.