Collective Communication Patterns

When a program runs as independent, often distributed, processes, it frequently needs all of them to communicate with each other, usually by sharing data, either before or after the independent computations that each process performs simultaneously. The examples below show simple versions of these collective communication patterns.

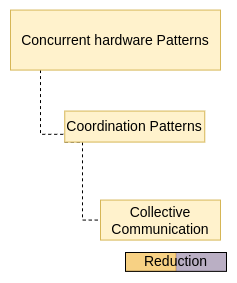

12. Collective Communication: Reduction on individual value

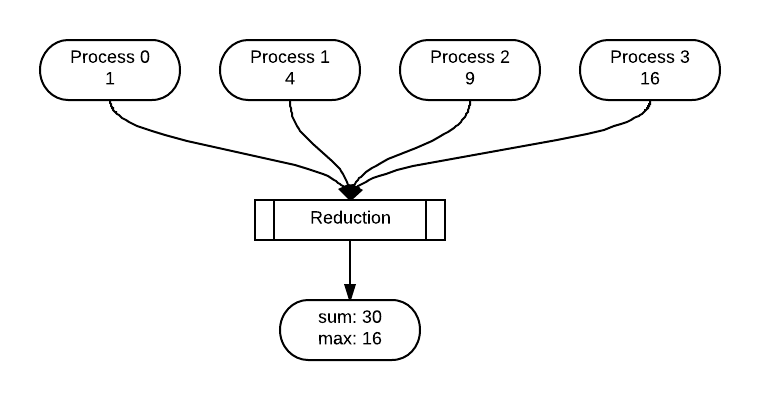

Once processes have performed independent concurrent computations, possibly on some portion of decomposed data, it is common to reduce those individual results into one value. In this example, a simple calculation done by each process is reduced to a sum and to a maximum. MPI has these reductions built in, indicated by MPI_SUM and MPI_MAX in the following code. With four processes, whose computed values for the variable square are shown in the ovals, the code is implemented like this:
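As a hedged illustration (not the example's own listing), here is a small serial C model, with no MPI calls, of what the root would hold after `MPI_Reduce` with `MPI_SUM` and with `MPI_MAX` when each of `nprocs` processes contributes one `int`. The per-process formula `square = (rank + 1) * (rank + 1)` and the helper names are hypothetical, chosen only for illustration; the call quoted in the comment follows the standard `MPI_Reduce(sendbuf, recvbuf, count, datatype, op, root, comm)` argument order.

```c
#include <limits.h>

/* Hypothetical per-process value, assumed for illustration to be
 * (rank + 1) squared; the real example may compute something else. */
int square_for_rank(int rank) {
    return (rank + 1) * (rank + 1);
}

/* Serial model of what the root receives from
 *   MPI_Reduce(&square, &sum, 1, MPI_INT, MPI_SUM, 0, MPI_COMM_WORLD);
 * every process's value is added together. */
int model_reduce_sum(int nprocs) {
    int sum = 0;
    for (int rank = 0; rank < nprocs; rank++) {
        sum += square_for_rank(rank);
    }
    return sum;
}

/* Serial model of the same call with MPI_MAX instead of MPI_SUM:
 * only the largest contribution survives. */
int model_reduce_max(int nprocs) {
    int max = INT_MIN;
    for (int rank = 0; rank < nprocs; rank++) {
        int value = square_for_rank(rank);
        if (value > max) {
            max = value;
        }
    }
    return max;
}
```

Under the assumed formula, four processes (ranks 0 to 3) contribute 1, 4, 9 and 16, so the modeled root holds a sum of 30 and a maximum of 16. In real MPI, `MPI_Reduce` fills the receive buffer only on the root; `MPI_Allreduce` is the variant that delivers the result to every process.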

To do:

Try varying the number of processes using the -np flag. Do the results match your understanding of how the reduction pattern works for multiple processes?

13. Collective Communication: Reduction on array of values

To do:

Can you explain the reduction, MPI_Reduce, in terms of srcArr and destArr?
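One way to picture a reduction with a count greater than one is the serial C model below (plain C, no MPI calls). It assumes each rank passes an `n`-element `srcArr` and the root receives `destArr`; the function name and the array-of-pointers layout (`srcArrs[r]` standing for rank `r`'s `srcArr`) are hypothetical. The point it illustrates is standard MPI behavior: the operation is applied element by element, not across the whole array.

```c
/* Serial model of
 *   MPI_Reduce(srcArr, destArr, n, MPI_INT, MPI_SUM, 0, MPI_COMM_WORLD);
 * srcArrs[r] stands for srcArr on rank r. destArr[i] on the root becomes
 * the sum of srcArr[i] over all ranks, so destArr has the same length n
 * as each srcArr rather than being a single number. */
void model_reduce_array_sum(const int *const srcArrs[], int nprocs, int n,
                            int *destArr) {
    for (int i = 0; i < n; i++) {
        int sum = 0;
        for (int r = 0; r < nprocs; r++) {
            sum += srcArrs[r][i];
        }
        destArr[i] = sum;
    }
}
```

For example, if rank 0 holds {1, 2, 3} and rank 1 holds {10, 20, 30}, the modeled destArr is {11, 22, 33}.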

Further Exploration:

This useful MPI tutorial explains other reduction operations that MPI provides. You can use the code above or the previous examples to experiment with some of them.
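To see how a few of MPI's predefined operations (`MPI_SUM`, `MPI_PROD`, `MPI_MIN`, `MPI_MAX`) differ, the hedged serial sketch below folds one value per process with each of them. The `enum model_op` names and both functions are hypothetical stand-ins written for illustration; they are plain C and make no MPI calls.

```c
/* Hypothetical stand-ins for four of MPI's predefined operations. */
enum model_op { MODEL_SUM, MODEL_PROD, MODEL_MIN, MODEL_MAX };

/* Combine two contributions the way the named MPI operation would. */
int model_combine(enum model_op op, int a, int b) {
    switch (op) {
    case MODEL_SUM:  return a + b;         /* like MPI_SUM  */
    case MODEL_PROD: return a * b;         /* like MPI_PROD */
    case MODEL_MIN:  return a < b ? a : b; /* like MPI_MIN  */
    case MODEL_MAX:  return a > b ? a : b; /* like MPI_MAX  */
    }
    return a; /* not reached for the four ops above */
}

/* Fold one value per process (values[0..nprocs-1], nprocs >= 1)
 * into the single result the root would receive. */
int model_reduce(enum model_op op, const int *values, int nprocs) {
    int result = values[0];
    for (int r = 1; r < nprocs; r++) {
        result = model_combine(op, result, values[r]);
    }
    return result;
}
```

The predefined operations are associative and commutative, so MPI is free to combine contributions in another order (for example, pairwise in a tree); the left-to-right fold here is just one valid order.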

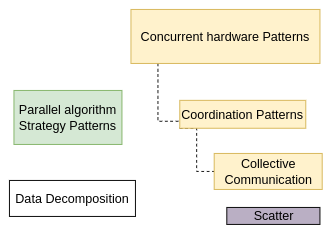

14. Collective Communication: Scatter for data decomposition

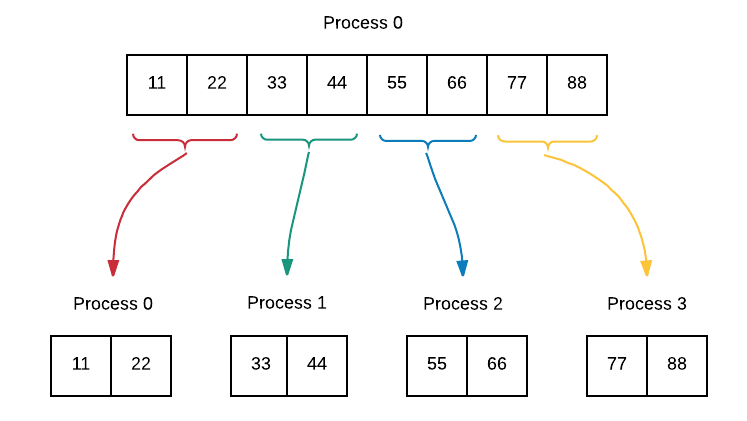

If processes can independently work on portions of a larger data array using the geometric data decomposition pattern, the scatter pattern can be used to ensure that each process receives a copy of its portion of the array. Process 0 gets the first chunk, process 1 gets the second chunk, and so on, until the entire array has been distributed.
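The chunk assignment described above can be modeled serially in plain C (no MPI calls) as follows. The names `sendArr`, `recvArr`, and `model_scatter_chunk` are hypothetical; `numSent` follows the exercise below. The comment quotes the standard `MPI_Scatter(sendbuf, sendcount, sendtype, recvbuf, recvcount, recvtype, root, comm)` argument order.

```c
/* Serial model of what one process receives from
 *   MPI_Scatter(sendArr, numSent, MPI_INT,
 *               recvArr, numSent, MPI_INT, 0, MPI_COMM_WORLD);
 * numSent is passed as both the send count and the receive count: the
 * root sends numSent ints to each process, and each process expects
 * numSent ints. Rank r gets the numSent consecutive elements starting
 * at offset r * numSent of the root's sendArr. */
void model_scatter_chunk(const int *sendArr, int numSent, int rank,
                         int *recvArr) {
    for (int j = 0; j < numSent; j++) {
        recvArr[j] = sendArr[rank * numSent + j];
    }
}
```

With an 8-element array {1, ..., 8} and numSent = 2, rank 1 would receive {3, 4} and rank 3 would receive {7, 8}.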

To do:

Find documentation for the MPI function MPI_Scatter and make sure you know what each parameter is for. Why are the second and fifth parameters (the send count and the receive count) in our example both numSent? What does this mean in terms of how MPI_Scatter works?

Run with varying numbers of processes, from 1 through 8, by changing the -np flag. What issues do you see for certain numbers of processes?

What happens when you try more than 8 processes?

Make sure you can follow the results shown and match them to the code example.

To Consider:

What previous data decomposition pattern is this similar to?

Warning

If you ran the example as suggested above, you saw that in some circumstances scatter does not behave as you might intend. In code that you write, add checks to ensure that the size of your array and the number of processes will result in correct behavior.
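A minimal sketch of such a check, assuming (as the exercise's process counts suggest) that the per-process count is computed by integer division, arrSize / nprocs. Because MPI_Scatter sends the same count to every process, a remainder leaves trailing elements unsent, and more processes than elements makes the count zero. The helper `checked_chunk_size` is hypothetical, not part of the original example.

```c
/* Hypothetical helper: returns the number of elements each process
 * should receive, or -1 when arrSize cannot be split evenly across
 * nprocs processes with at least one element per process. */
int checked_chunk_size(int arrSize, int nprocs) {
    if (nprocs <= 0 || arrSize < nprocs || arrSize % nprocs != 0) {
        return -1; /* uneven split, or more processes than elements */
    }
    return arrSize / nprocs;
}
```

In an MPI program, the root could call this before scattering and stop all processes with `MPI_Abort(MPI_COMM_WORLD, 1)` when it returns -1. When an uneven split is actually wanted, `MPI_Scatterv` accepts a separate count and displacement for each process.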

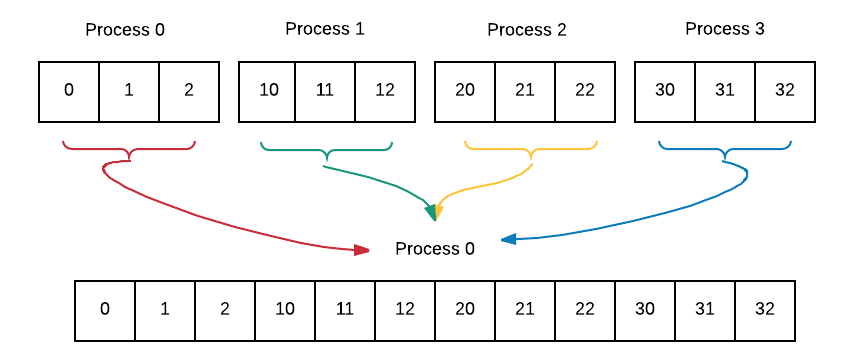

15. Collective Communication: Gather for message-passing data decomposition

If processes can independently work on portions of a larger data array using the geometric data decomposition pattern, the gather pattern can be used to ensure that each process sends a copy of its portion of the array back to the root (conductor) process. Gather is thus the reverse of scatter. Here is the idea:
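The reverse relationship can be modeled serially in plain C (no MPI calls). In this hedged sketch, `sendArrs[r]` stands for rank `r`'s send buffer and `size` plays the role of `SIZE`; the function and buffer names are hypothetical. One detail it reflects from the MPI standard: the receive count in `MPI_Gather` is the number of elements received from each process, not the total.

```c
/* Serial model of what the root receives from
 *   MPI_Gather(sendArr, SIZE, MPI_INT,
 *              gathered, SIZE, MPI_INT, 0, MPI_COMM_WORLD);
 * sendArrs[r] stands for sendArr on rank r. The root stores rank r's
 * size elements at offset r * size, so gathered must hold
 * nprocs * size ints, laid out in rank order (the scatter layout). */
void model_gather(const int *const sendArrs[], int nprocs, int size,
                  int *gathered) {
    for (int r = 0; r < nprocs; r++) {
        for (int j = 0; j < size; j++) {
            gathered[r * size + j] = sendArrs[r][j];
        }
    }
}
```

With three processes holding {1, 2}, {3, 4} and {5, 6}, the modeled root array is {1, 2, 3, 4, 5, 6}.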

To do:

Find documentation for the MPI function MPI_Gather and make sure you know what each parameter is for. Why are the second and fifth parameters (the send count and the receive count) in our example both SIZE? What does this mean in terms of how MPI_Gather works?

Run with varying numbers of processes by changing the -np flag.

Make sure you can follow the results shown and match them to the code example.