Collective Communication Patterns in MPI: Broadcast

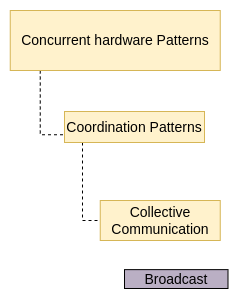

Processes often need to share data, which means ensuring that every process holds a copy of the same data in its own memory. This form of communication is usually done with a broadcast from the conductor to all of the worker processes. In the figure above, the part of the full patterns document illustrated by the examples in this section is on the left.

08. Broadcast: a special form of message passing

This example shows how a data item created by the conductor process can be sent to all the processes.

To send data from process 0 (by convention, the conductor) to all of the processes in the communicator, we use a broadcast. During a broadcast, one process sends the same data to every process in the communicator. A common use of broadcasting is to send user input to all of the processes in a parallel program. In our example, the broadcast is sent from process 0.

Note

In this single program, multiple data (SPMD) scenario, every process calls the MPI_Bcast function. The fourth parameter (the root) specifies the rank of the process that sends the data; all of the other processes receive it through that same call.

To do:

Notice the arguments to the MPI_Bcast function. Study them carefully and find additional documentation about this function.

Run this code several times, and also with more processes (8 to 12). What do you observe about the order in which the processes print? Is it repeatable, or does it change each time you run it?

09. Broadcast: incorporating user input

We can use command line arguments to incorporate user input into a program. In C, command line arguments are passed to main() through two parameters. The first, argc, is an integer giving the number of arguments on the command line, including the program name itself. The second, argv, is an array of pointers to character strings, one per argument; argv[0] is the program name.

For MPI programs, argc and argv are passed by address to MPI_Init (as in MPI_Init(&argc, &argv)); after that call, they contain only the command line arguments intended for the program itself.

We modified the previous broadcast example to accept an additional command line argument, an integer. Rather than reading a scalar value from a file, the program lets the user decide, when running it, which value is broadcast.

To do:

Try running the program without an argument for the number of processes (remove ‘-np 4’).

What is the default number of processes used on the system when we do not provide a number?

10. Broadcast: send receive equivalent

This example shows how to ensure that all processes have a copy of an array created by a single conductor process. Conductor process 0 sends the array to each of the other processes, and each of them receives it.

Note

This code correctly broadcasts data to all processes, but the pattern is so common that the MPI library provides the built-in MPI_Bcast function. Compare this example with the next one to see the value of using that single function.

11. Broadcast: send data array to all processes

The send-and-receive pattern in which one process sends the same data to all processes is used frequently, and broadcast was created for exactly this purpose. This example is the same as the previous one, except that the modified array is sent using broadcast.

To Consider:

Observe the second argument to MPI_Bcast() in this example. It indicates that a total of MAX (second argument) contiguous elements from array (first argument), of type MPI_INT (third argument), will be sent from process 0 (fourth argument) to all other processes.

For further exploration

These examples illustrate a common pattern in many parallel and distributed programs: the conductor node (typically rank 0) is the only one to obtain data, often by reading it from a file, and it is then responsible for distributing the data to all of the other processes. MPI also defines collective operations for reading and writing files in parallel, referred to as parallel I/O. You could investigate this topic further; note that your system must support parallel I/O for you to take advantage of it.

In the next section we will look at other forms of collective communication patterns.