7.2 OpenMP code with gcc and pgcc

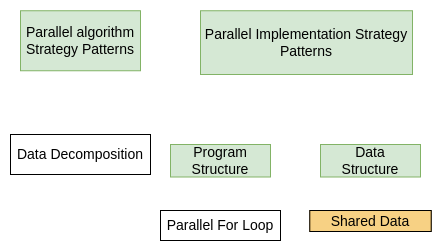

This simple addition of two arrays uses parallel patterns that have already appeared in this book: data decomposition, using the parallel for loop implementation strategy. What is new here is that the threads compute over data that they share in arrays.
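As a minimal sketch of that pattern (the book's own listing is not reproduced in this text, so the CPUadd signature below, with two int input arrays, an output array, and a length n, is an assumption), the parallel for pragma divides the loop iterations among the threads while the arrays themselves stay shared:

```c
#include <assert.h>

/* Hypothetical signature: z[i] = x[i] + y[i] for 0 <= i < n.
 * default(none) forces every variable's sharing to be stated:
 * the arrays and n are shared by all threads, while the loop
 * index i is automatically private to each thread. */
void CPUadd(const int *x, const int *y, int *z, int n) {
    assert(n >= 0);
    /* Data decomposition: the n iterations are divided among the threads. */
    #pragma omp parallel for default(none) shared(x, y, z, n)
    for (int i = 0; i < n; i++) {
        z[i] = x[i] + y[i];
    }
}
```

Because each iteration writes a different element z[i], no two threads ever write the same location, so the loop body needs no synchronization. Compiled without OpenMP, the pragma is ignored and the same function runs serially.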

Now let’s parallelize this code for the shared-memory multicore CPU with each of two compilers, gcc and pgcc. This illustrates that the new pgcc compiler will compile the same OpenMP code into a multithreaded version, provided we pass it the particular compiler arguments that request OpenMP.
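One way to confirm that those compiler arguments took effect is the standard _OPENMP macro, which an OpenMP-enabled compiler defines as the yyyymm date of the specification it supports. The sketch below assumes the commonly used flags, -fopenmp for gcc and -mp for pgcc; the function names and the file name vec_add.c are hypothetical.

```c
#include <assert.h>
#include <stdio.h>

/* Build commands assumed for this sketch (the file name is hypothetical):
 *   gcc  -fopenmp vec_add.c -o vec_add
 *   pgcc -mp      vec_add.c -o vec_add
 * Without the OpenMP flag, _OPENMP is undefined and the pragmas are ignored. */

/* Returns _OPENMP (a yyyymm date) when OpenMP is enabled, otherwise 0. */
long openmp_version(void) {
#ifdef _OPENMP
    return (long)_OPENMP;
#else
    return 0L;
#endif
}

/* Prints whether this translation unit was built with OpenMP. */
void report_openmp(void) {
    long v = openmp_version();
    /* OpenMP 1.0 for C/C++ dates from October 1998, so an enabled
     * compiler should report at least 199810. */
    assert(v == 0L || v >= 199810L);
    if (v > 0L)
        printf("OpenMP enabled (_OPENMP = %ld)\n", v);
    else
        printf("OpenMP not enabled: pragmas ignored, code runs serially\n");
}
```

Calling report_openmp() at the start of main would show immediately whether a given gcc or pgcc build is actually multithreaded.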

Note

Each of these examples includes the same command line argument functions and helper functions from the previous section. In both versions, the main function and the CPUadd function are identical: the number of threads defaults to 1 and can be overridden on the command line, and the CPUadd function contains the OpenMP pragma. The only difference between the two is how each is compiled. You can see the compiler arguments below the code in each example.
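The command line handling itself comes from the previous section and is not shown here. As a hypothetical stand-in, the sketch below parses the -n (array size) and -t (thread count) options used in the exercises. The caller sets the defaults first (1 thread, as described above), and apply_threads() hands the count to the OpenMP runtime through the standard omp_set_num_threads() routine. Both function names are assumptions of this sketch.

```c
#include <assert.h>
#include <stdlib.h>
#include <string.h>
#ifdef _OPENMP
#include <omp.h>
#endif

/* Hypothetical helper: overrides *n when "-n <size>" appears in argv and
 * *nthreads when "-t <threads>" appears. The caller sets the defaults
 * beforehand; any other arguments are ignored in this sketch. */
void parse_args(int argc, char *argv[], int *n, int *nthreads) {
    assert(n != NULL && nthreads != NULL);
    for (int i = 1; i + 1 < argc; i++) {
        if (strcmp(argv[i], "-n") == 0)
            *n = atoi(argv[++i]);        /* consume the value after -n */
        else if (strcmp(argv[i], "-t") == 0)
            *nthreads = atoi(argv[++i]); /* consume the value after -t */
    }
}

/* Requests nthreads threads for later parallel regions
 * (a no-op when compiled without OpenMP). */
void apply_threads(int nthreads) {
    assert(nthreads > 0);
#ifdef _OPENMP
    omp_set_num_threads(nthreads);
#endif
}
```

A main function would initialize nthreads to 1 and n to the program's default size, call parse_args(argc, argv, &n, &nthreads), and then call apply_threads(nthreads) before the parallel work begins.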

Another feature to note is that we have added calls to the OpenMP function omp_get_wtime() around the call to the vector addition function in main, so that we can time the code.
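A minimal sketch of that timing follows. omp_get_wtime() returns elapsed wall-clock seconds as a double, so subtracting two readings taken around the call gives the time for the addition alone. CPUadd here uses a hypothetical signature (two input arrays, an output array, and a length), and the clock() fallback, used only when the file is compiled without OpenMP, is an addition of this sketch that measures processor time rather than wall time.

```c
#include <assert.h>
#include <time.h>
#ifdef _OPENMP
#include <omp.h>
#endif

/* Elapsed wall-clock seconds from omp_get_wtime(). The clock() fallback,
 * used only without OpenMP, measures processor time instead. */
static double wall_time(void) {
#ifdef _OPENMP
    return omp_get_wtime();
#else
    return (double)clock() / CLOCKS_PER_SEC;
#endif
}

/* Hypothetical CPUadd signature: z[i] = x[i] + y[i] for 0 <= i < n. */
static void CPUadd(const int *x, const int *y, int *z, int n) {
    #pragma omp parallel for
    for (int i = 0; i < n; i++)
        z[i] = x[i] + y[i];
}

/* Times only the vector addition, mirroring where the text places the
 * omp_get_wtime() calls in main. Returns elapsed seconds. */
double timed_add(const int *x, const int *y, int *z, int n) {
    assert(n >= 0);
    double start = wall_time();
    CPUadd(x, y, z, n);
    return wall_time() - start;
}
```

Keeping array allocation and initialization outside the timed region means the reported time reflects only the parallel loop.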

Lastly, we have increased the default size of the arrays that we will be adding together, so that the threads have a bit more work.

Command line and helper functions

The gcc version

The new addition to this code is the OpenMP pragma in the CPUadd function. We can also set the number of threads to use on the command line. If you run it as is, the output shows which thread worked on each loop iteration.

Note

In OpenMP, we can debug and see how the loop iterations are decomposed among the threads by calling omp_get_thread_num() inside the parallel region of forked threads that the #pragma omp parallel for construct creates, as shown in the CPUadd function below. Unfortunately, the OpenACC standard has no function corresponding to omp_get_thread_num().
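That debugging idea can be sketched as follows. This variant (the name CPUadd_debug and its owner array are hypothetical) stores the result of omp_get_thread_num() for each iteration and optionally prints it, so the decomposition can also be inspected after the loop finishes.

```c
#include <assert.h>
#include <stdio.h>
#ifdef _OPENMP
#include <omp.h>
#endif

/* Calling thread's number inside a parallel region
 * (0 without OpenMP, where only one thread exists). */
static int thread_id(void) {
#ifdef _OPENMP
    return omp_get_thread_num();
#else
    return 0;
#endif
}

/* Hypothetical debugging variant of CPUadd: besides computing
 * z[i] = x[i] + y[i], it records in owner[i] which thread computed
 * iteration i and, if verbose is nonzero, prints one line per iteration.
 * Printed lines may appear in any order because threads run concurrently. */
void CPUadd_debug(const int *x, const int *y, int *z, int *owner,
                  int n, int verbose) {
    assert(n >= 0);
    #pragma omp parallel for
    for (int i = 0; i < n; i++) {
        z[i] = x[i] + y[i];
        owner[i] = thread_id();
        if (verbose)
            printf("thread %d computed iteration %d\n", owner[i], i);
    }
}
```

The OpenMP specification leaves the default loop schedule to the implementation; with a static schedule, which gcc's runtime typically uses by default, each thread usually receives one contiguous block of iterations.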

Exercise

Try varying the number of threads: remove ‘-n’, ‘10’ from the command line arguments and note that the program then uses a much larger array size and reports a time. Then try each of [‘-t’, ‘2’], [‘-t’, ‘4’], and [‘-t’, ‘8’] to see how much the running time improves.
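To make the comparison in this exercise concrete, one option is to time the same addition at several thread counts and compute the speedup S = t1 / tp relative to a single thread. The functions sweep_threads() and speedup() below are hypothetical helpers, not part of the book's code, and the clock() fallback applies only to builds without OpenMP.

```c
#include <assert.h>
#include <time.h>
#ifdef _OPENMP
#include <omp.h>
#endif

/* Wall-clock seconds via omp_get_wtime(); clock() fallback without OpenMP. */
static double wall_time(void) {
#ifdef _OPENMP
    return omp_get_wtime();
#else
    return (double)clock() / CLOCKS_PER_SEC;
#endif
}

/* For each counts[k], time one parallel addition z = x + y using that
 * many threads and store the elapsed seconds in times[k]. */
void sweep_threads(const int *x, const int *y, int *z, int n,
                   const int *counts, double *times, int ncounts) {
    for (int k = 0; k < ncounts; k++) {
        assert(counts[k] > 0);
#ifdef _OPENMP
        omp_set_num_threads(counts[k]);
#endif
        double start = wall_time();
        #pragma omp parallel for
        for (int i = 0; i < n; i++)
            z[i] = x[i] + y[i];
        times[k] = wall_time() - start;
    }
}

/* Speedup of a p-thread run over the 1-thread run: S = t1 / tp. */
double speedup(double t1, double tp) {
    assert(tp > 0.0);
    return t1 / tp;
}
```

Values of S above 1 mean the parallel run was faster. Because a parallel region that needs new threads usually also pays for creating them, you may want to discard one warm-up run before comparing times.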

The pgcc version

The following code is exactly the same as above, except that the compiler options are different for building OpenMP code with the pgcc compiler.

Exercises

Try varying the number of threads: remove ‘-n’, ‘10’ from the command line arguments, then try each of [‘-t’, ‘2’], [‘-t’, ‘4’], and [‘-t’, ‘8’] to see how much the running time improves.

What do you notice about the running times of the two versions, built by different compilers, under the same conditions?

Change the compiler optimization level of the gcc version to -O3. What do you notice about the difference in times for 2 threads? The pgcc compiler also accepts this optimization level, but using it does not change the running time.

Note

We experiment with differently compiled versions of the code here to demonstrate that compilers produce different code, and that you will want to run similar experiments on your own applications to see what works best for them. Your results may differ from the ones you see here, but we hope this motivates the need to experiment.